My earlier work on Streamlined NTRU Prime has been progressing along. The IETF document on sntrup761 in SSH has passed several process points. GnuPG's libgcrypt has added support for sntrup761. The libssh support for sntrup761 is working, but the merge request is stuck, mostly due to lack of time to debug why the regression test suite sporadically errors out in non-sntrup761-related parts with the patch.

The foundation for lattice-based post-quantum algorithms has some uncertainty around it, and I have felt that there is more to the post-quantum story than adding sntrup761 to implementations.

Classic McEliece has been mentioned to me a couple of times, so I took some time to learn it, did a cut'n'paste job of the proposed ISO standard and published draft-josefsson-mceliece in the IETF to make the algorithm easily available to the IETF community. A high-quality implementation of Classic McEliece has been published as libmceliece, and I've been supporting the work of Jan Mojžíš to package libmceliece for Debian; alas, it has been stuck in the ftp-master NEW queue for manual review for over two months. The pre-dependencies librandombytes and libcpucycles are already available in Debian.

All that text writing and packaging work set the scene to write some code. When I added support for sntrup761 in libssh, I became familiar with the OpenSSH code base, so it was natural to return to OpenSSH to experiment with a new SSH KEX for Classic McEliece.

DJB suggested picking mceliece6688128 and combining it with the existing X25519+sntrup761 or with plain X25519. While a three-algorithm hybrid of X25519, sntrup761 and mceliece6688128 would be a simple drop-in for those who don't want to lose the benefits offered by sntrup761, I decided to start the journey with a pure combination of X25519 and mceliece6688128. The key combiner in sntrup761x25519 is a simple SHA512 call, and the only good thing I can say about that is that it is simple to describe and implement, and doesn't raise too many questions since it is already deployed.
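To make the "simple SHA512 call" concrete, here is a minimal shell sketch, not OpenSSH code. It assumes, as in OpenSSH's sntrup761x25519 implementation, that the final shared secret is SHA-512 over the KEM shared secret followed by the raw X25519 shared secret; the file names and dummy 32-byte secrets are hypothetical stand-ins for values that in reality never touch disk.

```shell
#!/bin/sh
# Sketch of a concatenate-then-hash hybrid combiner:
#   K = SHA-512(kem_secret || x25519_secret)
# File names are hypothetical; real shared secrets stay in memory.

# combine_secrets KEM_FILE X25519_FILE: print the hex SHA-512 of the
# concatenated raw bytes, KEM secret first.
combine_secrets() {
    cat "$1" "$2" | sha512sum | cut -d ' ' -f 1
}

# Dummy 32-byte inputs: all 0x00 bytes and all 0x01 bytes.
head -c 32 /dev/zero > kem_ss.bin
head -c 32 /dev/zero | tr '\000' '\001' > x25519_ss.bin

combine_secrets kem_ss.bin x25519_ss.bin
```

Both peers must concatenate in the same order, since swapping the two arguments yields a different hash.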

After procrastinating on the coding for months, once I sat down to work it only took a couple of hours until I had a successful Classic McEliece SSH connection. I suppose my brain had sorted everything out in the background before I started. To reproduce it, please try the following in a Debian testing environment (I use podman to get a clean environment).

```shell
# podman run -it --rm debian:testing-slim
apt update
apt dist-upgrade -y
apt install -y wget python3 librandombytes-dev libcpucycles-dev gcc make git autoconf libz-dev libssl-dev
cd ~
wget -q -O- https://lib.mceliece.org/libmceliece-20230612.tar.gz | tar xfz -
cd libmceliece-20230612/
./configure
make install
ldconfig
cd ..
git clone https://gitlab.com/jas/openssh-portable
cd openssh-portable
git checkout jas/mceliece
autoreconf
./configure # verify 'libmceliece support: yes'
make # CC="cc -DDEBUG_KEX=1 -DDEBUG_KEXDH=1 -DDEBUG_KEXECDH=1"
```

You should now have a working SSH client and server that support Classic McEliece! Verify support by running `./ssh -Q kex`; it should mention `mceliece6688128x25519-sha512@openssh.com`.
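That check can be scripted. This is a sketch relying only on the `-Q kex` query above, which lists one algorithm per line; the helper name `has_kex` is hypothetical, and the check is skipped when no `./ssh` binary is present in the current directory.

```shell
#!/bin/sh
# has_kex NAME: succeed if NAME appears as a whole line on stdin.
# -F treats the name literally, so '.' and '@' are not regex characters.
has_kex() {
    grep -qxF -- "$1"
}

KEX=mceliece6688128x25519-sha512@openssh.com
if [ -x ./ssh ]; then
    if ./ssh -Q kex | has_kex "$KEX"; then
        echo "$KEX supported"
    else
        echo "$KEX missing" >&2
    fi
fi
```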

To have it print plenty of debug output, you may remove the `#` character on the final line, but don't use such a build in production.

You can test it as follows:

```shell
./ssh-keygen -A # writes to /usr/local/etc/ssh_host_...
# set up public-key based login by running the following:
./ssh-keygen -t rsa -f ~/.ssh/id_rsa -P ""
cat ~/.ssh/id_rsa.pub > ~/.ssh/authorized_keys
adduser --system sshd
mkdir /var/empty
while true; do $PWD/sshd -p 2222 -f /dev/null; done &
./ssh -v -p 2222 localhost -oKexAlgorithms=mceliece6688128x25519-sha512@openssh.com date
```

On the client you should see output like this:

```
OpenSSH_9.5p1, OpenSSL 3.0.11 19 Sep 2023
...
debug1: SSH2_MSG_KEXINIT sent
debug1: SSH2_MSG_KEXINIT received
debug1: kex: algorithm: mceliece6688128x25519-sha512@openssh.com
debug1: kex: host key algorithm: ssh-ed25519
debug1: kex: server->client cipher: chacha20-poly1305@openssh.com MAC: <implicit> compression: none
debug1: kex: client->server cipher: chacha20-poly1305@openssh.com MAC: <implicit> compression: none
debug1: expecting SSH2_MSG_KEX_ECDH_REPLY
debug1: SSH2_MSG_KEX_ECDH_REPLY received
debug1: Server host key: ssh-ed25519 SHA256:YognhWY7+399J+/V8eAQWmM3UFDLT0dkmoj3pIJ0zXs
...
debug1: Host '[localhost]:2222' is known and matches the ED25519 host key.
debug1: Found key in /root/.ssh/known_hosts:1
debug1: rekey out after 134217728 blocks
debug1: SSH2_MSG_NEWKEYS sent
debug1: expecting SSH2_MSG_NEWKEYS
debug1: SSH2_MSG_NEWKEYS received
debug1: rekey in after 134217728 blocks
...
debug1: Sending command: date
debug1: pledge: fork
debug1: permanently_set_uid: 0/0
Environment:
USER=root
LOGNAME=root
HOME=/root
PATH=/usr/bin:/bin:/usr/sbin:/sbin:/usr/local/bin
MAIL=/var/mail/root
SHELL=/bin/bash
SSH_CLIENT=::1 46894 2222
SSH_CONNECTION=::1 46894 ::1 2222
debug1: client_input_channel_req: channel 0 rtype exit-status reply 0
debug1: client_input_channel_req: channel 0 rtype eow@openssh.com reply 0
Sat Dec 9 22:22:40 UTC 2023
debug1: channel 0: free: client-session, nchannels 1
Transferred: sent 1048044, received 3500 bytes, in 0.0 seconds
Bytes per second: sent 23388935.4, received 78108.6
debug1: Exit status 0
```

Notice the `kex: algorithm: mceliece6688128x25519-sha512@openssh.com` output.

How about network bandwidth usage? Below is a comparison of complete SSH client connections, like the one above, that log in, print the date and log out. Plain X25519 is around 7kB, X25519 with sntrup761 is around 9kB, and mceliece6688128 with X25519 is around 1MB. Yes, Classic McEliece has large keys, but for many environments, 1MB of data for session establishment will barely be noticeable.

```
./ssh -v -p 2222 localhost -oKexAlgorithms=curve25519-sha256 date 2>&1 | grep ^Transferred
Transferred: sent 3028, received 3612 bytes, in 0.0 seconds
./ssh -v -p 2222 localhost -oKexAlgorithms=sntrup761x25519-sha512@openssh.com date 2>&1 | grep ^Transferred
Transferred: sent 4212, received 4596 bytes, in 0.0 seconds
./ssh -v -p 2222 localhost -oKexAlgorithms=mceliece6688128x25519-sha512@openssh.com date 2>&1 | grep ^Transferred
Transferred: sent 1048044, received 3764 bytes, in 0.0 seconds
```
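The rough totals above appear to be sent plus received bytes from each `Transferred:` line (for example 3028 + 3612 = 6640 bytes, about 7kB). A sketch of a helper that does this sum, assuming the `Transferred: sent N, received M bytes` format shown above; `total_bytes` is a hypothetical name, and the loop only runs when the freshly built `./ssh` is present.

```shell
#!/bin/sh
# total_bytes: read "Transferred: sent N, received M bytes, ..." lines on
# stdin and print N + M for each one; other lines are ignored.
total_bytes() {
    sed -n 's/^Transferred: sent \([0-9]*\), received \([0-9]*\) bytes.*/\1 \2/p' |
    while read -r sent received; do
        echo $((sent + received))
    done
}

# Compare the three key exchanges against the test server on port 2222.
if [ -x ./ssh ]; then
    for kex in curve25519-sha256 \
               sntrup761x25519-sha512@openssh.com \
               mceliece6688128x25519-sha512@openssh.com; do
        printf '%s: ' "$kex"
        ./ssh -v -p 2222 localhost -oKexAlgorithms="$kex" date 2>&1 | total_bytes
    done
fi
```

With the three log lines above this gives 6640, 8808 and 1051808 bytes, matching the 7kB, 9kB and 1MB figures.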

So how about session establishment time?

```
date; i=0; while test $i -le 100; do ./ssh -v -p 2222 localhost -oKexAlgorithms=curve25519-sha256 date > /dev/null 2>&1; i=`expr $i + 1`; done; date
Sat Dec 9 22:39:19 UTC 2023
Sat Dec 9 22:39:25 UTC 2023
# 6 seconds
date; i=0; while test $i -le 100; do ./ssh -v -p 2222 localhost -oKexAlgorithms=sntrup761x25519-sha512@openssh.com date > /dev/null 2>&1; i=`expr $i + 1`; done; date
Sat Dec 9 22:39:29 UTC 2023
Sat Dec 9 22:39:38 UTC 2023
# 9 seconds
date; i=0; while test $i -le 100; do ./ssh -v -p 2222 localhost -oKexAlgorithms=mceliece6688128x25519-sha512@openssh.com date > /dev/null 2>&1; i=`expr $i + 1`; done; date
Sat Dec 9 22:39:55 UTC 2023
Sat Dec 9 22:40:07 UTC 2023
# 12 seconds
```
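The loops above print wall-clock start and end times and leave the subtraction to the reader. An alternative sketch computes the elapsed time directly; it assumes `date +%s` (seconds since the epoch, supported by GNU and BSD date), and the helper `time_kex` and the `SSH` override variable are hypothetical names introduced for illustration.

```shell
#!/bin/sh
# time_kex KEX [N]: open N (default 100) connections to the local test
# server on port 2222 using key exchange KEX and report elapsed seconds.
# The client defaults to ./ssh; set SSH=/path/to/ssh to use another one.
time_kex() {
    kex=$1
    n=${2:-100}
    ssh_bin=${SSH:-./ssh}
    start=$(date +%s)
    i=0
    while [ "$i" -lt "$n" ]; do
        "$ssh_bin" -p 2222 localhost -oKexAlgorithms="$kex" date >/dev/null 2>&1
        i=$((i + 1))
    done
    end=$(date +%s)
    echo "$kex: $((end - start)) s for $n connections"
}
```

For example, `time_kex mceliece6688128x25519-sha512@openssh.com`. One-second resolution is coarse, but over 100 connections it is enough to show the magnitude.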

I never noticed adding sntrup761, so I'm pretty sure I wouldn't notice this increase either. This is all running on my laptop, which runs Trisquel, so take it with a grain of salt, but at least the magnitude is clear.

Future work items include:

- Use a better hybrid mode combiner than SHA512? See for example KEM Combiners.

- Write an IETF document describing the Classic McEliece SSH protocol.

- Submit my patch to the OpenSSH community for discussion and feedback; please review it in the meantime!

- Implement a mceliece6688128sntrup761x25519 multi-hybrid mode?

- Write a shell script à la sntrup761.sh to import a stripped-down mceliece6688128 implementation to avoid having OpenSSH depend on libmceliece?

- My kexmceliece6688128x25519.c is really just kexsntrup761x25519.c with the three calls to sntrup761 replaced with mceliece6688128. This should be parametrized to avoid cut'n'paste of code, if the OpenSSH community is interested.

- Consider if the behaviour of librandombytes related to closing of file descriptors is relevant to OpenSSH.

Happy post-quantum SSH'ing!

Update: Changing the mceliece6688128_keypair call to mceliece6688128f_keypair (i.e., using the fully compatible f-variant) results in McEliece being just as fast as sntrup761 on my machine.

Update 2023-12-26: An initial IETF document, draft-josefsson-ssh-mceliece-00, has been published.

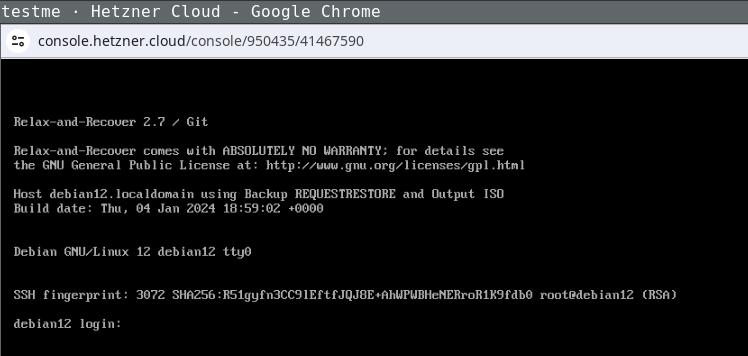

Now copy the ISO image to the newly created instance and extract its data:


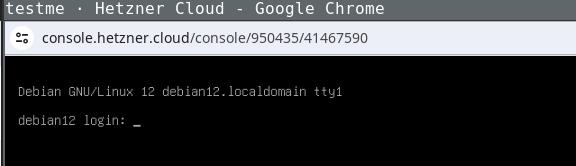

Log in to the recovery console (root without password) and fix its default route to make it reachable:


Being able to use this procedure for complete disaster recovery within Hetzner cloud VPS (using off-site backups) gives me a better feeling, too.


Today, the animation figure Mickey Mouse finally was released from the corporate copyright prison, as the 1928 movie


This post describes how I'm using


Some years ago a customer needed a live ISO containing a customized FAI environment (not for installing but for extended hardware stress tests), but on a USB stick with the possibility to store the logs of the tests on the USB stick. But an ISO file system (iso9660) remains read-only, even when put onto a USB stick.

I had the idea to add another partition onto the USB stick after the ISO was written to it (using cp or dd).

You can use fdisk with an ISO file, add a new partition, loop mount the ISO and format this partition. That's all. This worked perfectly for my customer.

I forgot about this idea for a while, but a few weeks ago I remembered it.

What would be possible if my FAI (Fully Automatic Installation) image also provided such a partition? Which things could be provided on this partition?

Could I provide a FAI ISO onto which my users could easily put their own .deb packages, without remastering the ISO or building an ISO on their own?

Now here's the shell script that extends an ISO or a USB stick with an ext4 or exFAT partition and sets the file system label to MY-DATA.


Nextcloud is a popular self-hosted solution for file sync and share as well as cloud apps such as document editing, chat and talk, calendar, photo gallery etc. This guide will walk you through setting up Nextcloud AIO using Docker Compose. This blog post would not be possible without immense help from Sahil Dhiman a.k.a.
